Best Practices for Using AWS Step Functions and Example Deployment with Terraform

AWS Step Functions stands out as a service for building and running distributed applications as visual workflows. It coordinates AWS services such as Amazon EC2, AWS Lambda, and Amazon SNS in a reliable and scalable manner. A project that combines Terraform and Step Functions can have many components, so it is essential to modularize and label resources wherever possible.

Understanding the recommended steps for using various tools to create Step Functions state machines is crucial. It’s recommended to use a combination of Workflow Studio and local development with Terraform. This workflow stipulates that you define all resources for your application within the same Terraform project. It also assumes that you will use Terraform for managing your AWS resources.

Common Uses for AWS Step Functions

- Microservices: Step Functions can be used to build and orchestrate microservices architectures, breaking down complex applications into smaller, independent services.

- Batch Processing: It can be used to process large volumes of data or perform tasks that need to be run periodically.

- Data Pipelines: It’s perfect for building and managing data pipelines, moving data between different sources and destinations in a reliable and scalable manner.

- Event-Driven Architectures: It can be used to build event-driven architectures, responding to specific events and triggering appropriate actions.

Step Functions Best Practices:

- Optimize performance by using the right execution mode and service integration, implementing batch processing and retries, and monitoring usage with Amazon CloudWatch.

- Enhance security using IAM roles for tasks, encrypting sensitive data, tracking changes with CloudTrail, and controlling access with resource-level permissions.

- Improve operational efficiency by passing large payloads as Amazon S3 object ARNs instead of raw data, monitoring the health of your state machines with CloudWatch metrics, and using timeouts to avoid stuck executions.

- Boost reliability by handling transient errors, ensuring tasks are idempotent, proactively responding to issues with CloudWatch alarms, and regularly testing workflows.

- Optimize costs by choosing between Standard and Express workflows based on your needs, and by monitoring usage to reduce costs.
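
To make the reliability and timeout advice more concrete, here is a minimal Python sketch that builds a Task state with a timeout, a retry policy for transient Lambda errors, and a catch-all fallback. The helper name `resilient_task`, the `HandleFailure` state name, and the specific interval and attempt values are assumptions chosen for illustration; they are not taken from the example project.

```python
import json


def resilient_task(function_arn, next_state, failure_state="HandleFailure",
                   timeout_seconds=30):
    """Build an ASL Task state (as a dict) with timeout, Retry, and Catch.

    `failure_state` is a hypothetical state name; it must exist in the
    state machine that this Task state is added to.
    """
    return {
        "Type": "Task",
        "Resource": "arn:aws:states:::lambda:invoke",
        "Parameters": {"FunctionName": function_arn, "Payload.$": "$"},
        "OutputPath": "$.Payload",
        # Fail the state instead of letting it hang indefinitely.
        "TimeoutSeconds": timeout_seconds,
        # Retry transient errors with exponential backoff (2s, 4s, 8s).
        "Retry": [
            {
                "ErrorEquals": [
                    "Lambda.ServiceException",
                    "Lambda.TooManyRequestsException",
                    "States.Timeout",
                ],
                "IntervalSeconds": 2,
                "MaxAttempts": 3,
                "BackoffRate": 2.0,
            }
        ],
        # Anything still failing after the retries goes to the fallback state.
        "Catch": [
            {
                "ErrorEquals": ["States.ALL"],
                "ResultPath": "$.error",
                "Next": failure_state,
            }
        ],
        "Next": next_state,
    }


if __name__ == "__main__":
    # The ARN below is a placeholder, not a real function.
    state = resilient_task(
        "arn:aws:lambda:us-east-1:123456789012:function:example",
        "CheckStatusCode",
    )
    print(json.dumps(state, indent=2))
```

Because a retried task may run more than once, the Lambda function behind it should be idempotent, as the reliability bullet above recommends.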

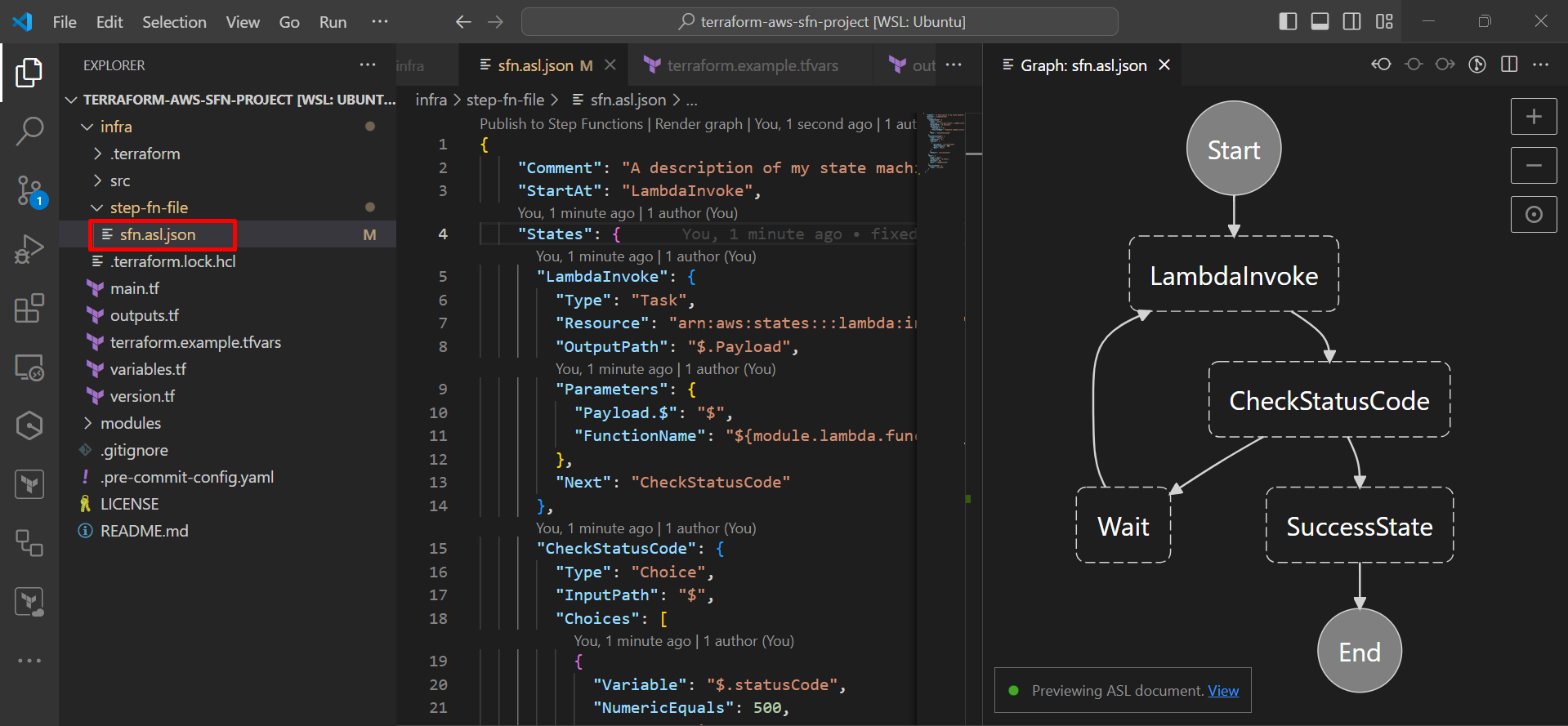

When developing Step Functions state machines locally, you can see a visual depiction of the state machine in VS Code, which renders it much like Workflow Studio does. This feature is part of the AWS Toolkit for VS Code; you can learn more about its state machine integration in the AWS Toolkit for VS Code documentation. Below, you'll find an example of a parameterized ASL file used in this project and its corresponding visualization in VS Code.

Example Step Function:

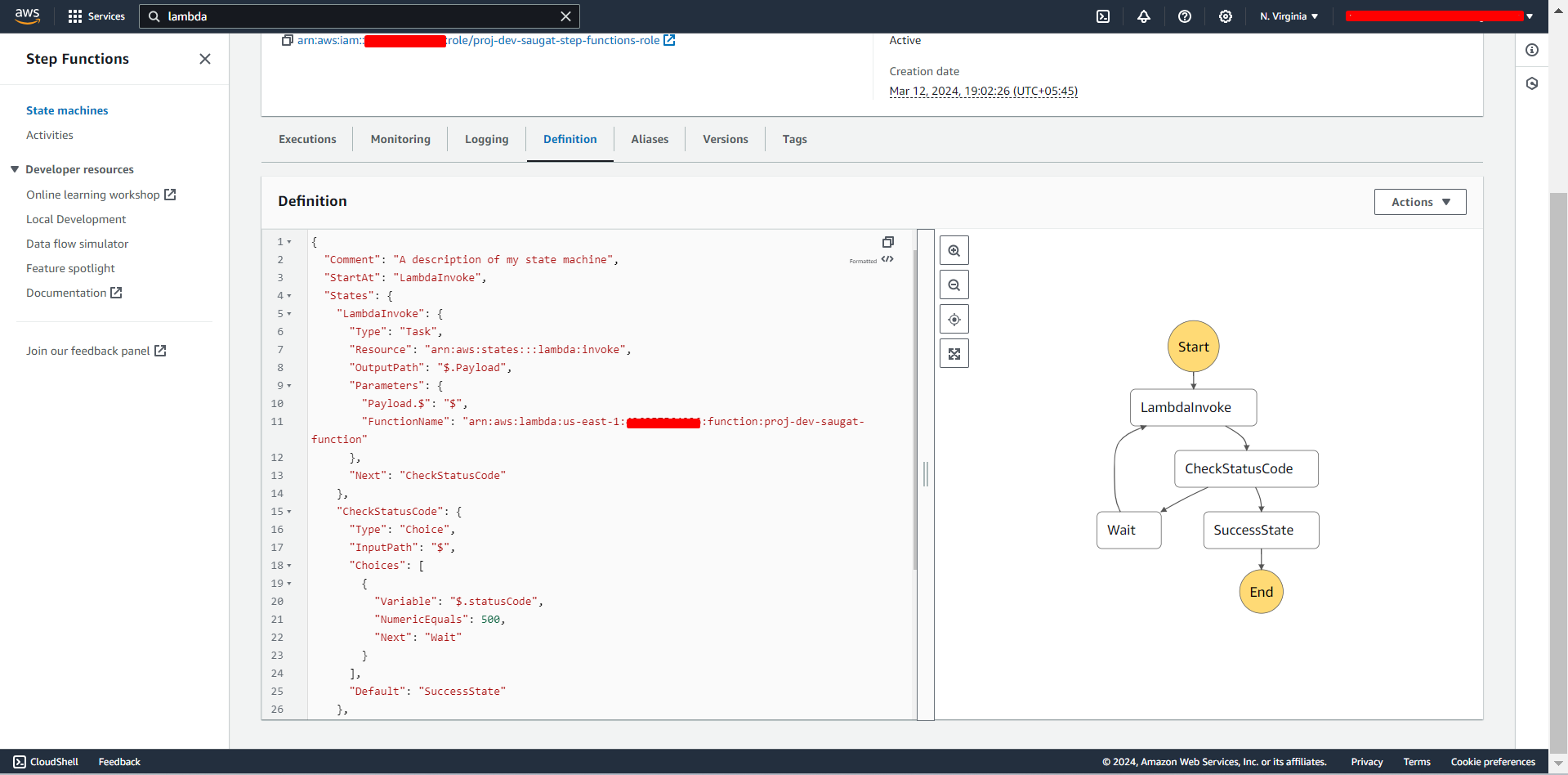

Let's take an example of a Step Functions state machine with the configuration below. It starts with a LambdaInvoke state, which invokes a Lambda function. The output of this Lambda function is then checked in the CheckStatusCode state. If the status code is 500, the state machine enters a Wait state for 10 seconds before invoking the Lambda function again. If the status code is not 500, the state machine transitions to the SuccessState and completes its execution.

    {
      "Comment": "A description of my state machine",
      "StartAt": "LambdaInvoke",
      "States": {
        "LambdaInvoke": {
          "Type": "Task",
          "Resource": "arn:aws:states:::lambda:invoke",
          "OutputPath": "$.Payload",
          "Parameters": {
            "Payload.$": "$",
            "FunctionName": "${module.lambda.function_arn}"
          },
          "Next": "CheckStatusCode"
        },
        "CheckStatusCode": {
          "Type": "Choice",
          "InputPath": "$",
          "Choices": [
            {
              "Variable": "$.statusCode",
              "NumericEquals": 500,
              "Next": "Wait"
            }
          ],
          "Default": "SuccessState"
        },
        "Wait": {
          "Type": "Wait",
          "OutputPath": "$.event",
          "Seconds": 10,
          "Next": "LambdaInvoke"
        },
        "SuccessState": {
          "Type": "Succeed"
        }
      }
    }
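
The definition above implies a contract for the Lambda function: its payload needs a numeric `statusCode` for the Choice state and an `event` field, because the Wait state passes `$.event` back to LambdaInvoke. The following Python sketch shows one handler that satisfies that contract, plus a plain-Python walk through the loop. It is not the project's actual `main.py`; the `attempts` and `fail_until` fields and the `run_workflow` helper are hypothetical and exist only to make the retry loop observable.

```python
def lambda_handler(event, context):
    """Hypothetical handler: returns 500 until `fail_until` attempts are made."""
    attempts = event.get("attempts", 0) + 1
    status_code = 500 if attempts < event.get("fail_until", 1) else 200
    # The Wait state outputs "$.event", so the payload carries the updated
    # event back as input for the next LambdaInvoke.
    return {"statusCode": status_code, "event": {**event, "attempts": attempts}}


def run_workflow(event, max_loops=10):
    """Walk the LambdaInvoke -> CheckStatusCode -> Wait loop locally."""
    state_input = event
    for _ in range(max_loops):
        # LambdaInvoke: OutputPath "$.Payload" keeps only the handler's result.
        payload = lambda_handler(state_input, None)
        # CheckStatusCode: anything other than 500 takes the Default branch.
        if payload["statusCode"] != 500:
            return payload  # SuccessState passes its input through as output
        # Wait: the 10-second pause is skipped here; OutputPath "$.event".
        state_input = payload["event"]
    raise RuntimeError("workflow did not succeed within max_loops iterations")


if __name__ == "__main__":
    print(run_workflow({"fail_until": 3}))
```

Note that the state machine itself places no upper bound on the number of loops; the `max_loops` guard exists only in this sketch.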

The project is available here: https://github.com/saugat86/terraform-aws-sfn-project

Project Structure:

The project files are organized as follows:

    .
    ├── LICENSE
    ├── README.md
    ├── infra
    │   ├── dev.tfvars
    │   ├── main.tf
    │   ├── outputs.tf
    │   ├── src
    │   │   └── main.py
    │   ├── step-fn-file
    │   │   └── sfn.asl.json
    │   ├── variables.tf
    │   └── version.tf
    └── modules
        ├── kms
        ├── lambda
        ├── s3
        └── step-function

Here’s a brief explanation of the directories and files:

- `README.md`: A Markdown file where you can provide documentation for your project.

- `infra`: This directory contains the main Terraform files (`main.tf`, `variables.tf`, `version.tf`) that define the infrastructure of the example project. It includes a `dev.tfvars` file to set environment-specific variables and an `outputs.tf` file to define the outputs of the Terraform code.

- `infra/src/main.py`: The primary Python code for the project's Lambda function.

- `infra/step-fn-file/sfn.asl.json`: The definition of the project's Step Functions state machine.

- `modules`: This directory contains separate Terraform modules for the different components of the project, such as `kms`, `lambda`, `s3`, and `step-function`. Each of these directories contains Terraform files (`main.tf`, `variables.tf`, `outputs.tf`, `versions.tf`) specific to that component. Some components also have additional Terraform files for specific functionality, such as `bucket-policy.tf` and `consumer-policy.tf` in the `s3` directory.

Infrastructure Deployment:

    $ git clone git@github.com:saugat86/terraform-aws-sfn-project.git
    $ cd terraform-aws-sfn-project/infra
    $ terraform init
    $ terraform plan -var-file="dev.tfvars"
    $ terraform apply -var-file="dev.tfvars"

- The `cd` command changes the current directory to the `infra` directory of the cloned repository.

- `terraform init` initializes a working directory containing Terraform configuration files.

- `terraform plan -var-file="dev.tfvars"` creates an execution plan. It determines what actions are necessary to achieve the desired state specified in the configuration files. The `-var-file` flag tells Terraform which file to read variable values from; in this case, the file is `dev.tfvars`.

- `terraform apply -var-file="dev.tfvars"` applies the changes needed to reach the desired state of the configuration. The `-var-file` flag serves the same purpose as in the previous command.
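
Before running `terraform plan`, it can help to sanity-check the state machine definition locally. The sketch below is a small, hypothetical checker (the `check_asl` name and the message format are assumptions, and it covers only a few structural rules, not the full Amazon States Language specification). It verifies that `StartAt` and every `Next`, `Default`, and `Catch` target refer to a state that exists.

```python
import json


def check_asl(definition):
    """Return a list of problems found in an ASL definition given as a dict."""
    problems = []
    states = definition.get("States", {})

    start = definition.get("StartAt")
    if start not in states:
        problems.append(f"StartAt: unknown target {start!r}")

    for name, state in states.items():
        # Collect every transition target this state can reach.
        targets = []
        if "Next" in state:
            targets.append(state["Next"])
        if "Default" in state:
            targets.append(state["Default"])
        for choice in state.get("Choices", []):
            if "Next" in choice:
                targets.append(choice["Next"])
        for catcher in state.get("Catch", []):
            if "Next" in catcher:
                targets.append(catcher["Next"])

        for target in targets:
            if target not in states:
                problems.append(f"{name}: unknown target {target!r}")

    return problems


if __name__ == "__main__":
    # Path is relative to the infra directory of the example project.
    with open("step-fn-file/sfn.asl.json") as handle:
        for problem in check_asl(json.load(handle)):
            print(problem)
```

The `${module.lambda.function_arn}` placeholder sits inside a JSON string, so the parameterized file still parses as valid JSON before Terraform substitutes the value.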

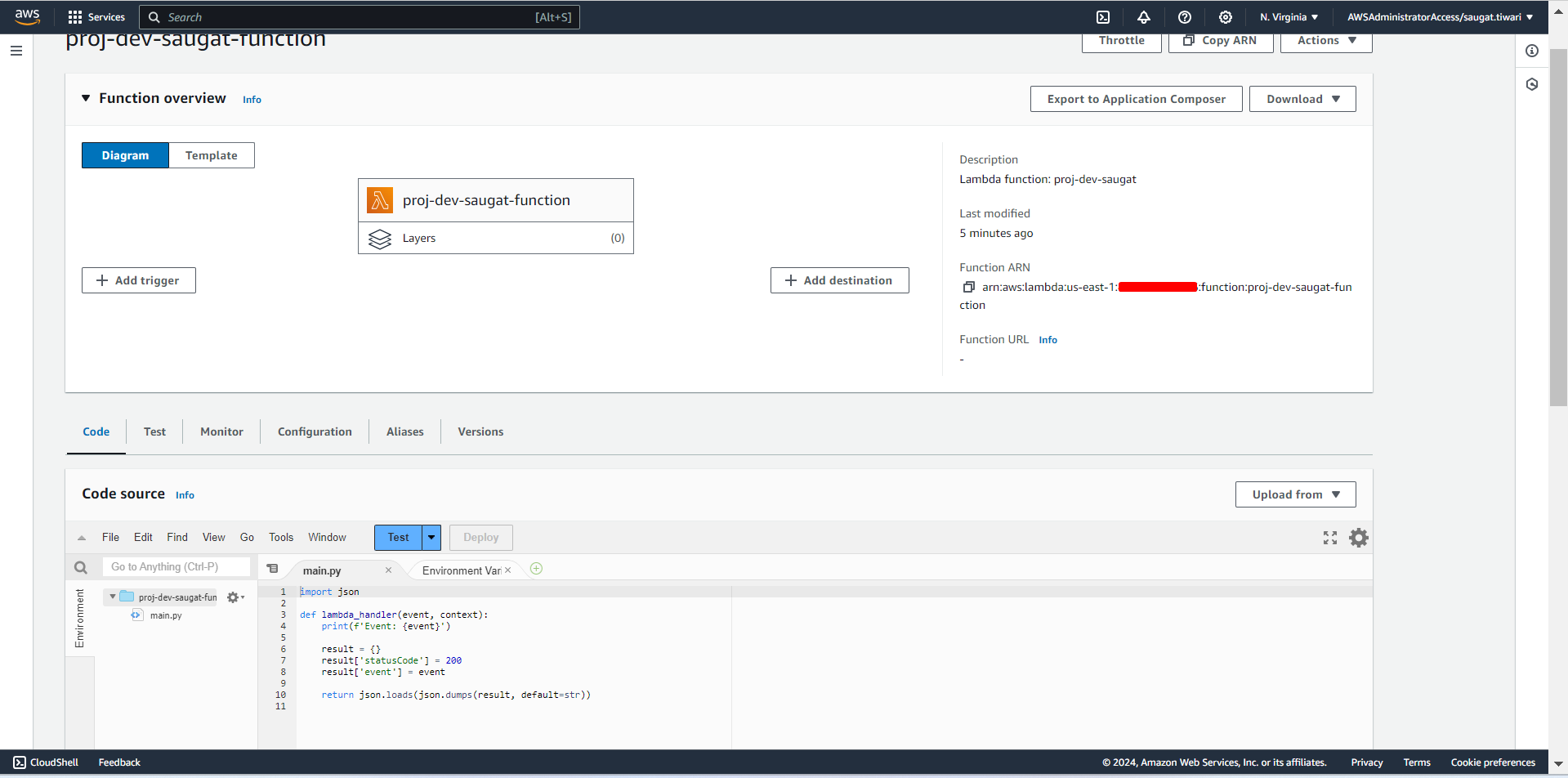

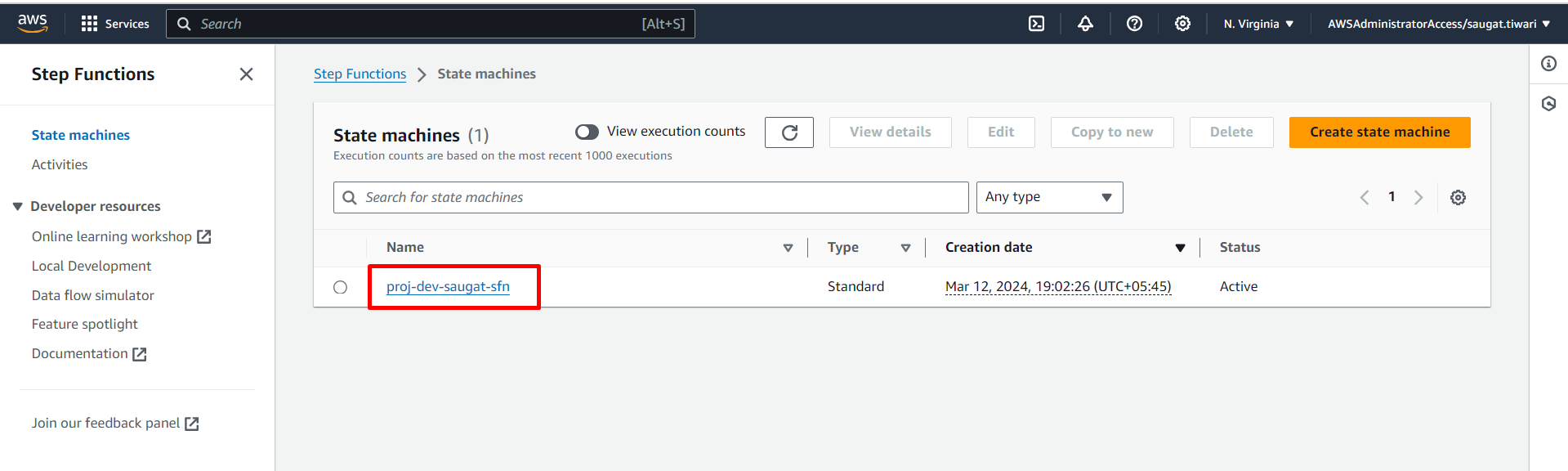

Verify the Infrastructure in the AWS Console

Once the Terraform configuration has been applied, verify the resources in your AWS account:

Next, check the Lambda function under the Lambda console.

Now, proceed to the Step Functions console, where you'll find your state machine.

Let's examine the definition of the state machine.

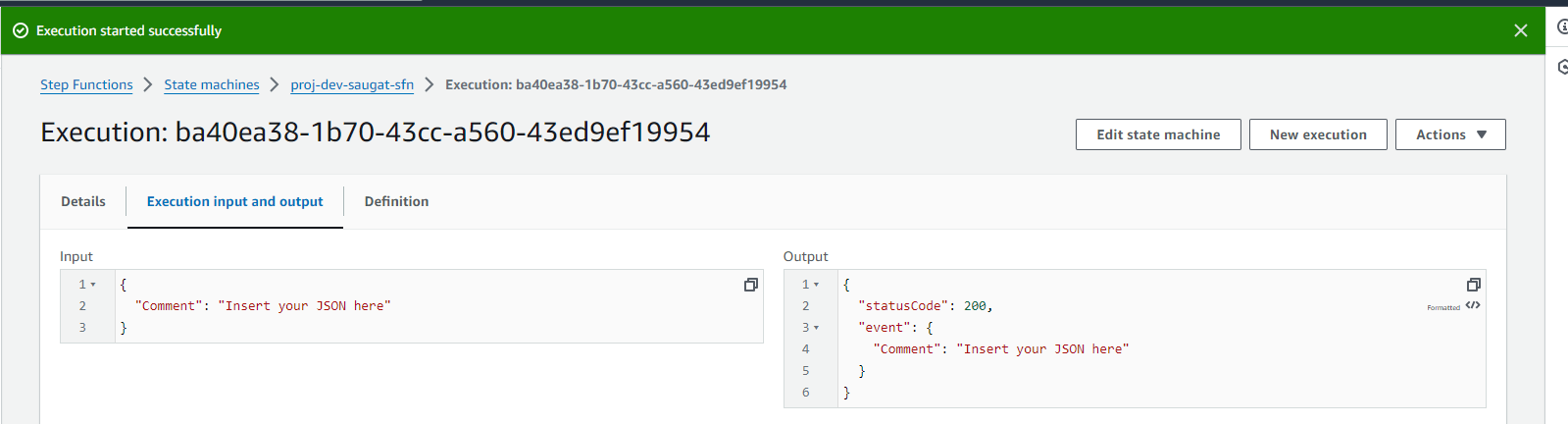

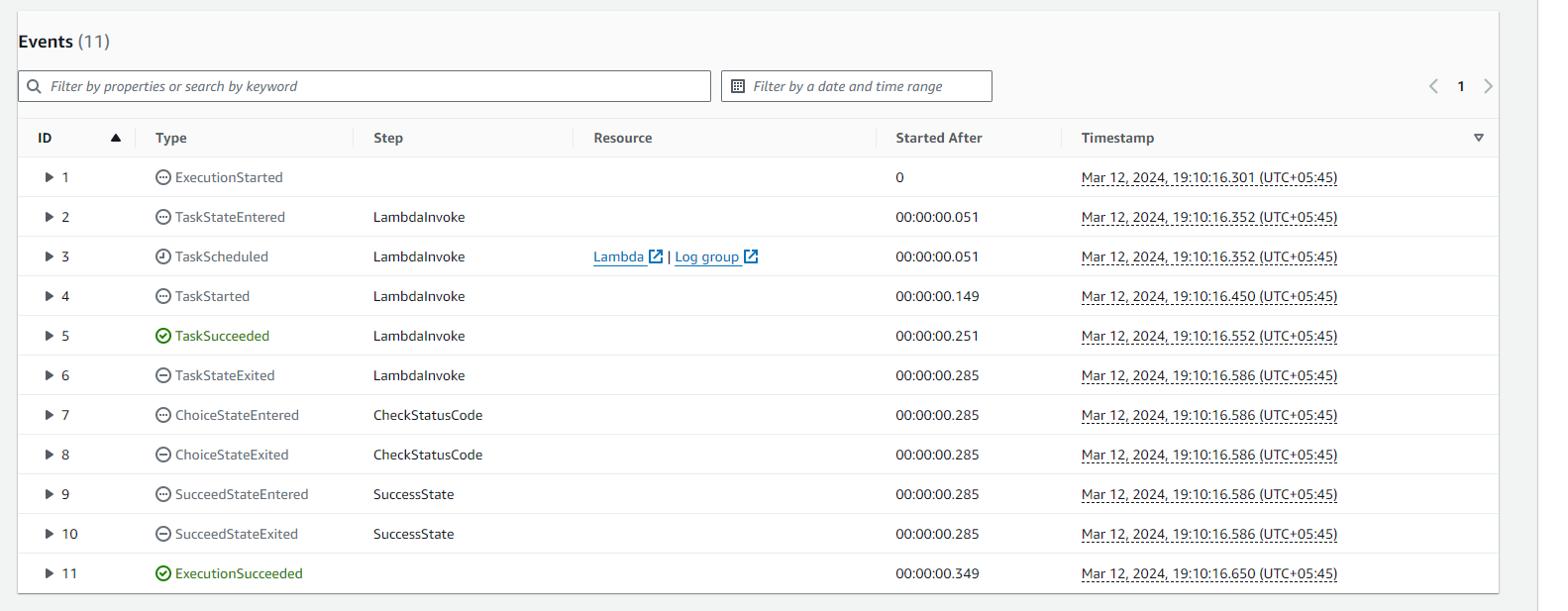

Let’s execute the state machine manually. The resulting events and output can be seen in the following snapshots:

Conclusion:

In conclusion, AWS Step Functions provides a reliable, scalable, and visual way to coordinate complex workflows. By integrating with other AWS services, it supports the creation of a wide variety of applications. This blog has walked through deploying a Step Functions state machine with Terraform, highlighting important best practices along with a working example.

As we have seen, following these steps and practices helps you optimize performance, enhance security, improve operational efficiency, increase reliability, and control costs.